Process Scheduling Program In C

- Fcfs Scheduling Program In C With Arrival Time And Gantt Chart

- Process Scheduling Program In C Format

- Process Scheduling Program In China

Operating Systems: CPU SchedulingCPU SchedulingReferences:. Abraham Silberschatz, Greg Gagne, and Peter Baer Galvin, 'Operating System Concepts, Ninth Edition ', Chapter 66.1 Basic Concepts. Almost all programs have some alternating cycle of CPU number crunching and waiting for I/O of some kind. 3. In the first Gantt chart below, process P1 arrives first.

The average waiting time for the three processes is ( 0 + 24 + 27 ) / 3 = 17.0 ms. In the second Gantt chart below, the same three processes have an average wait time of ( 0 + 3 + 6 ) / 3 = 3.0 ms. The total run time for the three bursts is the same, but in the second case two of the three finish much quicker, and the other process is only delayed by a short amount. FCFS can also block the system in a busy dynamic system in another way, known as the convoy effect.

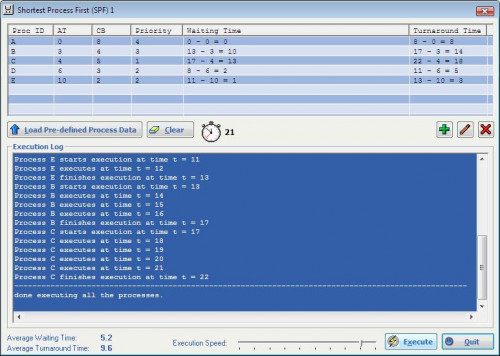

When one CPU intensive process blocks the CPU, a number of I/O intensive processes can get backed up behind it, leaving the I/O devices idle. 3. In the case above the average wait time is ( 0 + 3 + 9 + 16 ) / 4 = 7.0 ms, ( as opposed to 10.25 ms for FCFS for the same processes.

). SJF can be proven to be the fastest scheduling algorithm, but it suffers from one important problem: How do you know how long the next CPU burst is going to be?. For long-term batch jobs this can be done based upon the limits that users set for their jobs when they submit them, which encourages them to set low limits, but risks their having to re-submit the job if they set the limit too low. However that does not work for short-term CPU scheduling on an interactive system. Another option would be to statistically measure the run time characteristics of jobs, particularly if the same tasks are run repeatedly and predictably.

But once again that really isn't a viable option for short term CPU scheduling in the real world. A more practical approach is to predict the length of the next burst, based on some historical measurement of recent burst times for this process.

One simple, fast, and relatively accurate method is the exponential average, which can be defined as follows. ( The book uses tau and t for their variables, but those are hard to distinguish from one another and don't work well in HTML. )estimate i + 1 = alpha. burst i + ( 1.0 - alpha ). estimate i. In this scheme the previous estimate contains the history of all previous times, and alpha serves as a weighting factor for the relative importance of recent data versus past history. If alpha is 1.0, then past history is ignored, and we assume the next burst will be the same length as the last burst.

If alpha is 0.0, then all measured burst times are ignored, and we just assume a constant burst time. Most commonly alpha is set at 0.5, as illustrated in Figure 5.3:Figure 6.3 - Prediction of the length of the next CPU burst. SJF can be either preemptive or non-preemptive.

Preemption occurs when a new process arrives in the ready queue that has a predicted burst time shorter than the time remaining in the process whose burst is currently on the CPU. Preemptive SJF is sometimes referred to as shortest remaining time first scheduling. For example, the following Gantt chart is based upon the following data:ProcessArrival TimeBurst Time. 5. The average wait time in this case is ( ( 5 - 3 ) + ( 10 - 1 ) + ( 17 - 2 ) ) / 4 = 26 / 4 = 6.5 ms.

( As opposed to 7.75 ms for non-preemptive SJF or 8.75 for FCFS. )6.3.3 Priority Scheduling. Priority scheduling is a more general case of SJF, in which each job is assigned a priority and the job with the highest priority gets scheduled first.

( SJF uses the inverse of the next expected burst time as its priority - The smaller the expected burst, the higher the priority. ). Note that in practice, priorities are implemented using integers within a fixed range, but there is no agreed-upon convention as to whether 'high' priorities use large numbers or small numbers. This book uses low number for high priorities, with 0 being the highest possible priority.

For example, the following Gantt chart is based upon these process burst times and priorities, and yields an average waiting time of 8.2 ms:ProcessBurst TimePriority. 2.

Priorities can be assigned either internally or externally. Internal priorities are assigned by the OS using criteria such as average burst time, ratio of CPU to I/O activity, system resource use, and other factors available to the kernel. External priorities are assigned by users, based on the importance of the job, fees paid, politics, etc. Priority scheduling can be either preemptive or non-preemptive. Priority scheduling can suffer from a major problem known as indefinite blocking, or starvation, in which a low-priority task can wait forever because there are always some other jobs around that have higher priority. If this problem is allowed to occur, then processes will either run eventually when the system load lightens ( at say 2:00 a.m.

), or will eventually get lost when the system is shut down or crashes. ( There are rumors of jobs that have been stuck for years. ).

One common solution to this problem is aging, in which priorities of jobs increase the longer they wait. 3. The performance of RR is sensitive to the time quantum selected.

If the quantum is large enough, then RR reduces to the FCFS algorithm; If it is very small, then each process gets 1/nth of the processor time and share the CPU equally. BUT, a real system invokes overhead for every context switch, and the smaller the time quantum the more context switches there are. ( See Figure 6.4 below.

) Most modern systems use time quantum between 10 and 100 milliseconds, and context switch times on the order of 10 microseconds, so the overhead is small relative to the time quantum.Figure 6.4 - The way in which a smaller time quantum increases context switches. Turn around time also varies with quantum time, in a non-apparent manner. Consider, for example the processes shown in Figure 6.5:Figure 6.5 - The way in which turnaround time varies with the time quantum.

Fcfs Scheduling Program In C With Arrival Time And Gantt Chart

In general, turnaround time is minimized if most processes finish their next cpu burst within one time quantum. For example, with three processes of 10 ms bursts each, the average turnaround time for 1 ms quantum is 29, and for 10 ms quantum it reduces to 20. However, if it is made too large, then RR just degenerates to FCFS. A rule of thumb is that 80% of CPU bursts should be smaller than the time quantum.6.3.5 Multilevel Queue Scheduling.

When processes can be readily categorized, then multiple separate queues can be established, each implementing whatever scheduling algorithm is most appropriate for that type of job, and/or with different parametric adjustments. Scheduling must also be done between queues, that is scheduling one queue to get time relative to other queues. Two common options are strict priority ( no job in a lower priority queue runs until all higher priority queues are empty ) and round-robin ( each queue gets a time slice in turn, possibly of different sizes. CFS ( Completely Fair Scheduler ) PerformanceThe Linux CFS scheduler provides an efficient algorithm for selecting whichtask to run next. Each runnable task is placed in a red-black tree—a balancedbinary search tree whose key is based on the value of vruntime. This tree isshown below:When a task becomes runnable, it is added to the tree. If a task on thetree is not runnable ( for example, if it is blocked while waiting for I/O ), it isremoved.

Process Scheduling Program In C Format

Generally speaking, tasks that have been given less processing time( smaller values of vruntime ) are toward the left side of the tree, and tasksthat have been given more processing time are on the right side. Accordingto the properties of a binary search tree, the leftmost node has the smallestkey value, which for the sake of the CFS scheduler means that it is the taskwith the highest priority. Because the red-black tree is balanced, navigatingit to discover the leftmost node will require O(lgN) operations (where Nis the number of nodes in the tree).

However, for efficiency reasons, theLinux scheduler caches this value in the variable rbleftmost, and thusdetermining which task to run next requires only retrieving the cached value.New sidebar in ninth edition. The Linux scheduler is a preemptive priority-based algorithm with two priority ranges - Real time from 0 to 99 and a nice range from 100 to 140. Unlike Solaris or XP, Linux assigns longer time quantums to higher priority tasks.Figure 6.21 - Scheduling priorities on a Linux system.Replaced by Figure 6.21 in the ninth edition. Was Figure 5.15 in eighth edition /.

The Following material was omitted from the ninth edition:. A runnable task is considered eligible for execution as long as it has not consumed all the time available in it's time slice.

Those tasks are stored in an active array, indexed according to priority. When a process consumes its time slice, it is moved to an expired array. The tasks priority may be re-assigned as part of the transferal. When the active array becomes empty, the two arrays are swapped.

These arrays are stored in runqueue structures. On multiprocessor machines, each processor has its own scheduler with its own runqueue.Omitted from ninth edition. Figure 5.16in eighth edition./ 6.7.2 Example: Windows XP Scheduling ( was 5.6.2 ). Windows XP uses a priority-based preemptive scheduling algorithm. The dispatcher uses a 32-level priority scheme to determine the order of thread execution, divided into two classes - variable class from 1 to 15 and real-time class from 16 to 31, ( plus a thread at priority 0 managing memory. ). There is also a special idle thread that is scheduled when no other threads are ready.

Win XP identifies 7 priority classes ( rows on the table below ), and 6 relative priorities within each class ( columns. ). Processes are also each given a base priority within their priority class. When variable class processes consume their entire time quanta, then their priority gets lowered, but not below their base priority.

Processes in the foreground ( active window ) have their scheduling quanta multiplied by 3, to give better response to interactive processes in the foreground.Figure 6.22 - Windows thread priorities. 6.7.1 Example: Solaris Scheduling. Priority-based kernel thread scheduling. Four classes ( real-time, system, interactive, and time-sharing ), and multiple queues / algorithms within each class.

Default is time-sharing. Process priorities and time slices are adjusted dynamically in a multilevel-feedback priority queue system. Time slices are inversely proportional to priority - Higher priority jobs get smaller time slices. Interactive jobs have higher priority than CPU-Bound ones. See the table below for some of the 60 priority levels and how they shift.

'Time quantum expired' and 'return from sleep' indicate the new priority when those events occur. ( Larger numbers are a higher, i.e. Better priority.

)Figure 6.23 - Solaris dispatch table for time-sharing and interactive threads. Solaris 9 introduced two new scheduling classes: Fixed priority and fair share. Fixed priority is similar to time sharing, but not adjusted dynamically. Fair share uses shares of CPU time rather than priorities to schedule jobs. A certain share of the available CPU time is allocated to a project, which is a set of processes.

Process Scheduling Program In China

System class is reserved for kernel use. ( User programs running in kernel mode are NOT considered in the system scheduling class. )Figure 6.24 - Solaris scheduling. 6.8 Algorithm Evaluation. The first step in determining which algorithm ( and what parameter settings within that algorithm ) is optimal for a particular operating environment is to determine what criteria are to be used, what goals are to be targeted, and what constraints if any must be applied.

For example, one might want to 'maximize CPU utilization, subject to a maximum response time of 1 second'. Once criteria have been established, then different algorithms can be analyzed and a 'best choice' determined. The following sections outline some different methods for determining the 'best choice'.6.8.1 Deterministic Modeling. If a specific workload is known, then the exact values for major criteria can be fairly easily calculated, and the 'best' determined. For example, consider the following workload ( with all processes arriving at time 0 ), and the resulting schedules determined by three different algorithms:ProcessBurst Time. FCFS:Non-preemptive SJF:Round Robin:.

The average waiting times for FCFS, SJF, and RR are 28ms, 13ms, and 23ms respectively. Deterministic modeling is fast and easy, but it requires specific known input, and the results only apply for that particular set of input. However by examining multiple similar cases, certain trends can be observed. ( Like the fact that for processes arriving at the same time, SJF will always yield the shortest average wait time.

)6.8.2 Queuing Models. Specific process data is often not available, particularly for future times. However a study of historical performance can often produce statistical descriptions of certain important parameters, such as the rate at which new processes arrive, the ratio of CPU bursts to I/O times, the distribution of CPU burst times and I/O burst times, etc.

Armed with those probability distributions and some mathematical formulas, it is possible to calculate certain performance characteristics of individual waiting queues. For example, Little's Formula says that for an average queue length of N, with an average waiting time in the queue of W, and an average arrival of new jobs in the queue of Lambda, then these three terms can be related by:N = Lambda. W. Queuing models treat the computer as a network of interconnected queues, each of which is described by its probability distribution statistics and formulas such as Little's formula.